Introduction

With the global datasphere estimated to reach 181 zettabytes this year, the scope for growth in big data is at an all-time high. However, as the popular Spider-Man quote goes, “With great power comes great responsibility,” and data scientists and AI experts are bound to face many challenges while handling big data. As a data science engineer, you may run into pressing challenges that can become roadblocks in your project. It is therefore vital to understand the core challenges in big data and the solutions that can address them.

- Challenges in Big Data

- Data Integration and Compliance

- Data Preparation

- Analysis and Processing

- Resource Constraints and Skill Gap

- Privacy Concerns

- Solutions for Challenges

- Scope of Scalability

Challenges in Big Data

Given the sheer scale of big data and its applications across industries such as finance, retail, healthcare, and transportation, complications in managing it are inevitable. Organizations need efficient storage, integration of data from multiple sources, and real-time processing, supported by customized management strategies. Here are some of the common challenges in Big Data management.

Data Integration and Compliance

Integrating data from different sources is a complicated task. Merging structured data from traditional databases with unstructured or semi-structured data, and presenting the combined data consistently in visualizations, are among the main challenges. Implementing complex algorithms and integrating with generative AI models can also be cumbersome and prone to manual oversights. In some situations, these oversights can result in inaccurate analysis and misguided decision-making, causing significant losses. Another challenge is managing data security risks and complying with regulations such as GDPR. Any slip-up here can cost millions in fines and tarnish an organization's reputation.
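To make the merging step concrete, here is a minimal sketch in plain Python. It assumes a hypothetical set of structured customer rows and a hypothetical stream of semi-structured JSON events; the field names (`customer_id`, `event`, `amount`) are invented for illustration and do not come from any particular platform.

```python
import json

# Hypothetical structured rows, as they might come from a relational table.
crm_rows = [
    {"customer_id": 1, "name": "Asha", "country": "IN"},
    {"customer_id": 2, "name": "Ben", "country": "UK"},
]

# Hypothetical semi-structured events, e.g. JSON lines from a clickstream.
event_lines = [
    '{"customer": {"id": 1}, "event": "purchase", "amount": "49.90"}',
    '{"customer": {"id": 2}, "event": "view"}',
    '{"customer": {"id": 3}, "event": "purchase", "amount": "12.00"}',
]


def flatten_event(line):
    """Turn one nested JSON event into a flat record with typed fields."""
    raw = json.loads(line)
    return {
        "customer_id": raw["customer"]["id"],
        "event": raw["event"],
        # The amount is optional and arrives as a string in this example.
        "amount": float(raw["amount"]) if "amount" in raw else None,
    }


def integrate(rows, lines):
    """Join events to customer rows; keep unmatched events for review."""
    by_id = {row["customer_id"]: row for row in rows}
    merged, unmatched = [], []
    for line in lines:
        event = flatten_event(line)
        profile = by_id.get(event["customer_id"])
        if profile is None:
            unmatched.append(event)
        else:
            merged.append({**profile, **event})
    return merged, unmatched


merged, unmatched = integrate(crm_rows, event_lines)
```

Keeping the unmatched events in a separate list, rather than silently dropping them, is one simple way to surface the kind of oversight that leads to inaccurate analysis.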

Data Preparation

Cleaning, transforming, and enriching data to make it suitable for analysis is labor-intensive and time-consuming. Data preparation is often said to take up nearly 80% of a project's time. If it is done incorrectly, inconsistencies, missing values, and errors carry over into the analysis. High data volumes can also create performance bottlenecks, so scalable solutions that adapt to fluctuating volumes are pivotal in preventing system failures.
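As a rough illustration of what cleaning and transforming involve, the sketch below removes duplicates, normalizes text, and fills missing values. The records and field names are hypothetical, and filling gaps with the median is only one possible strategy, chosen here for simplicity.

```python
from statistics import median

# Hypothetical raw records with typical quality problems.
raw_records = [
    {"id": "1", "city": " Pune ", "age": "34"},
    {"id": "2", "city": "pune", "age": ""},
    {"id": "2", "city": "pune", "age": ""},      # duplicate id
    {"id": "3", "city": "Delhi", "age": "n/a"},  # unparseable age
    {"id": "4", "city": "DELHI", "age": "41"},
]


def parse_age(value):
    """Return the age as an int, or None when it cannot be parsed."""
    try:
        return int(value)
    except ValueError:
        return None


def clean(records):
    """Deduplicate by id, normalize city names, and fill missing ages."""
    seen, cleaned = set(), []
    for rec in records:
        if rec["id"] in seen:
            continue  # keep only the first record for each id
        seen.add(rec["id"])
        cleaned.append({
            "id": int(rec["id"]),
            "city": rec["city"].strip().title(),
            "age": parse_age(rec["age"]),
        })
    # Fill missing ages with the median of the known ages.
    known = [r["age"] for r in cleaned if r["age"] is not None]
    fill = median(known) if known else None
    for r in cleaned:
        if r["age"] is None:
            r["age"] = fill
    return cleaned


cleaned = clean(raw_records)
```

Even in this small example, most of the code deals with edge cases rather than analysis, which hints at why preparation consumes so much project time.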

Analysis and Processing

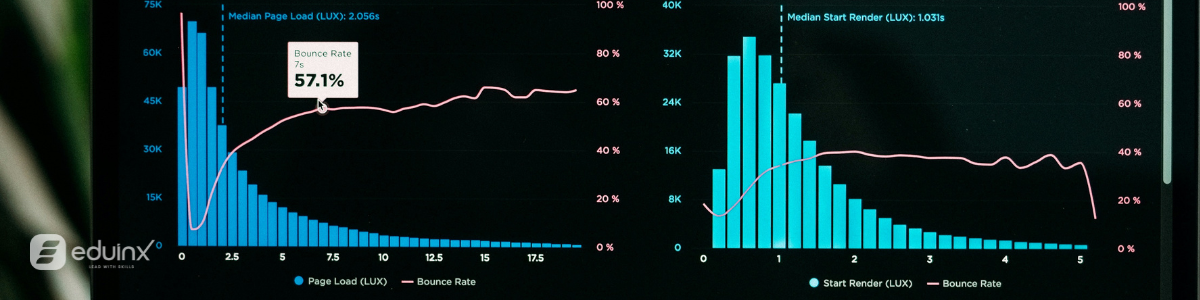

In the BFSI (banking, financial services, and insurance) and trading industries, using Big Data to track stock market trends and gauge health conditions requires reliable, well-tested data processing. Traditional batch processing is often too slow for these use cases, so data science engineers need to implement real-time analytics to overcome this challenge.
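The difference between batch and real-time processing can be sketched with a sliding-window average that updates on every incoming value instead of waiting for a full dataset. This is a simplified, single-process illustration; the class name `SlidingAverage` and the price ticks are hypothetical, and a production system would typically rely on a dedicated stream processing engine.

```python
from collections import deque


class SlidingAverage:
    """Maintain the average of the most recent `size` values."""

    def __init__(self, size):
        self.window = deque(maxlen=size)
        self.total = 0.0

    def update(self, price):
        """Add one value and return the current windowed average."""
        if len(self.window) == self.window.maxlen:
            # The oldest value is about to be evicted by append().
            self.total -= self.window[0]
        self.window.append(price)
        self.total += price
        return self.total / len(self.window)


# Hypothetical price ticks arriving one at a time.
ticks = [100.0, 102.0, 101.0, 105.0]
tracker = SlidingAverage(size=3)
averages = [tracker.update(price) for price in ticks]
```

Each call to `update` does a constant amount of work, so the result is available as soon as a tick arrives rather than after a scheduled batch run.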

Resource Constraints and Skill Gap

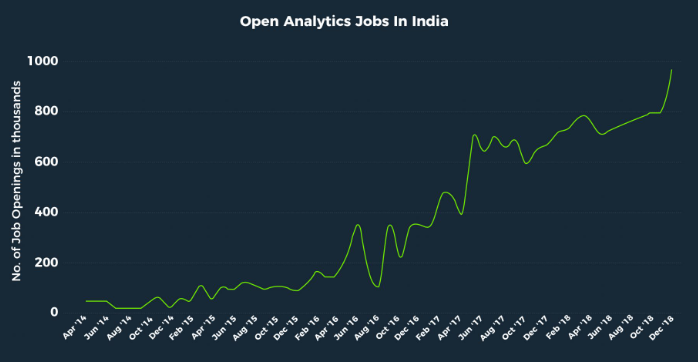

In a constantly changing industry, data management demands a specific set of skills, including knowledge of Big Data, Hadoop, and generative AI implementation. A gap in these skills and resources can result in downtime for enterprise-level companies. Small and mid-sized companies, meanwhile, are usually more likely to hire fresh candidates who have completed a capstone project.

Privacy Concerns

With cybercrime on the rise, the extraction and analysis of personal information raise essential privacy concerns. Awareness of data misuse, hacking, phishing, and other cyberattacks is key, as is ensuring that up-to-date cybersecurity measures are implemented in the system.
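One common way to limit exposure of personal information during analysis is to pseudonymize identifying fields before the data reaches analysts. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library. The key, the record, and the choice of sensitive fields are all hypothetical placeholders, and this technique is shown as one option, not as a complete privacy solution.

```python
import hashlib
import hmac

# Hypothetical placeholder; a real key would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value, key=SECRET_KEY):
    """Replace a personal identifier with a stable keyed hash."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def protect_record(record, sensitive_fields=("email", "phone")):
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        field: pseudonymize(value) if field in sensitive_fields else value
        for field, value in record.items()
    }


# Hypothetical customer record.
record = {"email": "Asha@Example.com", "phone": "+91-9000000000", "plan": "premium"}
safe = protect_record(record)
```

Because the same input always maps to the same token, records can still be joined and counted per customer. Pseudonymized data is not anonymous, however, so access controls and key management remain necessary.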

Solutions for Challenges

Here are some solutions for the core challenges in Big Data.

- Data integration and quality control: Robust data integration platforms and data wrangling tools can clean and transform data automatically. Deploying machine learning algorithms to catch inconsistencies, combined with continuous monitoring, helps maintain quality.

- Compliance and data security: As a data scientist and Big Data expert, you can use advanced encryption methods with strict access controls and multi-factor authentication to improve data security. Periodic audits are also needed to ensure alignment with regulatory frameworks and standards.

- Improving performance: Cloud storage solutions combined with distributed computing frameworks let systems scale according to data volumes. An established data science professional would also use containerization and virtualization technologies to improve system performance and responsiveness.

- Data preparation: To streamline the process and reduce manual effort, organizations can use AI-assisted tools that learn from human inputs to clean data effectively.

- Real-time data analysis and processing: Real-time data processing engines and in-memory computing provide near-instant analysis and response. A skilled data science engineer looks for ways to integrate these technologies into existing systems, enabling businesses to seize opportunities or minimize risks as they arise.

- Bridging skill gaps: In a competitive market where layoffs are common, companies can bridge skill gaps by outsourcing specific operations to niche service providers. This gives them time to upskill their in-house resources without resorting to layoffs.

- Privacy concerns: The best way to overcome this challenge in Big Data is by framing clear privacy policies and ethical guidelines. Be sure to conduct reviews and deploy quality checks wherever required.

- Implementing cloud-based solutions and distributed systems: Distributed systems like Hadoop and Spark process data in parallel and help in handling large data sets. They can be scaled up or down according to requirements in a cost-effective manner. Cloud providers offer scalable, flexible, and secure solutions for comprehensive data management and infrastructure services.
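The parallel processing idea behind distributed systems such as Hadoop and Spark can be illustrated with a small split, map, and reduce example. The sketch below is plain Python and does not use the Hadoop or Spark APIs. The function names and log lines are hypothetical, and a thread pool stands in for the cluster purely to show the pattern.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_chunks(items, n_chunks):
    """Split a list into at most n_chunks roughly equal parts."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def map_chunk(lines):
    """Map step: count the words in one chunk independently."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def word_count(lines, workers=3):
    """Run the map step per chunk, then reduce the partial counts."""
    chunks = split_into_chunks(lines, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_chunk, chunks))
    # Reduce step: merge the per-chunk counters into one result.
    return reduce(lambda a, b: a + b, partials, Counter())


# Hypothetical log lines standing in for a large data set.
log_lines = [
    "error disk full",
    "warning disk slow",
    "error network down",
    "info disk ok",
]
totals = word_count(log_lines)
```

Because each chunk is processed without reference to the others, the same structure can in principle be spread across many machines, which is what allows such systems to scale with data volume.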

Scope of Scalability

Now that you understand the different ways to overcome challenges in Big Data, you also need to understand the potential for scalability, with machine learning and generative AI dominating the current industry. Automating processes such as data management, predictive analytics, and anomaly detection is pivotal. The best way to do this is by designing gen AI models that fit the current landscape. How do you do that, you may ask?

At Eduinx, our mentors are non-academicians with industry-relevant expertise in Big Data, generative AI, AI product management, and data science operations. Whether you are a professional looking for a breakthrough in your career or a fresher looking to land your first job, Eduinx is here to help you scale up in your career. We provide a holistic, hands-on approach to learning Big Data and generative AI concepts. Our mentors guide you in completing capstone projects and building the right resume to land your dream job.