Introduction

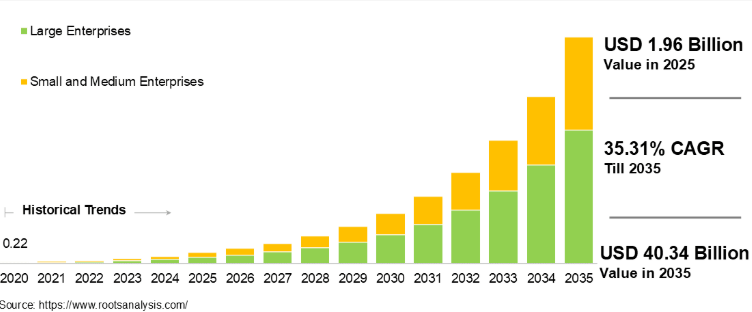

Did you know that, according to a recent study, the global retrieval augmented generation market is projected to grow at a CAGR of 49.1% from 2025 to 2030? As the scope for Retrieval Augmented Generation (RAG) widens, a RAG-based capstone project lets you build a generative AI model tailored to the core requirements of an industry or organization. This can help you grow in your career and make your profile stand out to organizations that are actively hiring. If you are a budding data scientist, developer, or product manager keen to learn about generative AI, understanding RAG will give you an edge regardless of the industry you work in. In this blog, you will learn what RAG is, the main RAG architectures, how RAG is used in NLP, and how RAG differs from traditional language models.

What is Retrieval Augmented Generation?

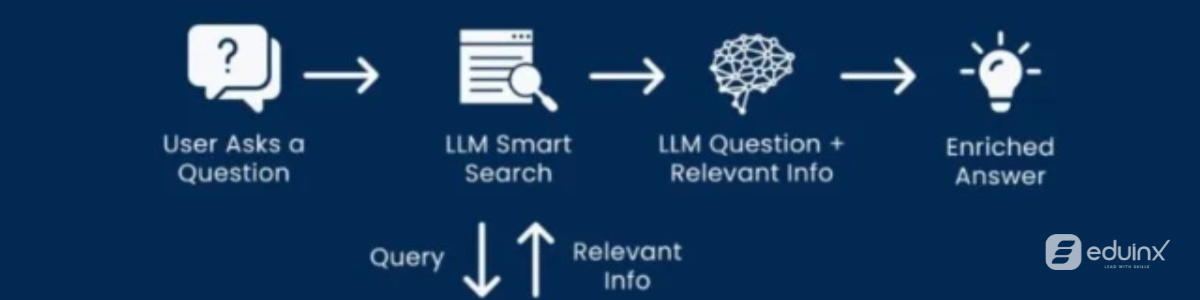

Large Language Models (LLMs) are known for the wide range of capabilities they bring to the table. However, they also have significant limitations: they are prone to biases, hallucinations, and outdated or mismatched data, and they can generate inaccurate or irrelevant information. So, what do you do when an LLM falls short of expectations? That’s where retrieval augmented generation comes into play.

RAG is a framework that makes LLM output more reliable by grounding it in accurate and relevant information. It improves text generation with real-time data retrieval. Instead of depending solely on pre-trained knowledge, a RAG system lets the model search external databases or documents during the generation process, resulting in a more appropriate response. RAG does the following.

- Retrieval: Obtains the relevant data from appropriate external sources based on the user’s prompt

- Generation: This data is processed, and an appropriate response is generated
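
The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production setup: the `DOCUMENTS` list, the word-overlap scoring in `retrieve`, and the `generate` function (a stand-in that echoes the prompt instead of calling a real LLM) are all assumptions made for the example.

```python
import re

# Toy knowledge base (assumed data); in practice this would be an
# external database or document store.
DOCUMENTS = [
    "Our store is open from 9 am to 6 pm on weekdays.",
    "Refunds are processed within 5 business days.",
    "Shipping is free for orders above 50 dollars.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Retrieval step: rank documents by word overlap with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, context):
    """Combine the retrieved context with the user's question."""
    context_block = "\n".join(context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"


def generate(prompt):
    """Generation step: hypothetical stand-in for an LLM call (echoes the prompt)."""
    return f"[LLM response to]\n{prompt}"


query = "When are refunds processed?"
context = retrieve(query, DOCUMENTS)
answer = generate(build_prompt(query, context))
print(answer)
```

In a real system, the word-overlap ranking would typically be replaced by embedding similarity over a vector store, and `generate` by a call to the chosen LLM.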

RAG Architecture

Now that you have understood what RAG is, it is time to delve into the eight important types of RAG architecture that you need to know.

Simple RAG

As the name suggests, simple RAG is the most basic form of RAG: the language model retrieves relevant documents from a static database in response to the user’s query. This architecture is best suited to small, static knowledge bases, as in FAQ systems or customer support bots, where the response needs to be factually accurate.

Simple RAG with memory

This architecture comprises a simple RAG with a storage component that enables the model to retain information from prior interactions. This is widely used in chatbots as models need to remember the user’s preferences according to prior interactions and provide appropriate results.
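
One way to picture the storage component is a small buffer of earlier user turns that is folded into the retrieval query. The sketch below assumes a toy word-overlap retriever and a hypothetical `MemoryRAG` class; the menu data is made up for illustration.

```python
import re
from collections import deque

# Assumed toy data: two menus the assistant can recommend from.
DOCS = [
    "Seafood menu: grilled prawns, fish curry, and crab cakes.",
    "Vegetarian menu: paneer tikka, dal makhani, and vegetable biryani.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents):
    """Return the document sharing the most words with the query."""
    query_words = tokenize(query)
    return max(documents, key=lambda doc: len(query_words & tokenize(doc)))


class MemoryRAG:
    """Hypothetical wrapper that remembers the last few user turns."""

    def __init__(self, documents, max_turns=3):
        self.documents = documents
        self.memory = deque(maxlen=max_turns)  # oldest turns drop off automatically

    def ask(self, query):
        # Fold earlier turns into the retrieval query so that follow-up
        # questions keep the user's stated preferences.
        expanded_query = " ".join([*self.memory, query])
        document = retrieve(expanded_query, self.documents)
        self.memory.append(query)
        return document


bot = MemoryRAG(DOCS)
print(bot.ask("I prefer vegetarian food."))
print(bot.ask("What do you recommend from the menu?"))
```

Without the memory, the second question mentions only "menu" and matches both documents equally; with it, the earlier preference tips retrieval toward the vegetarian menu.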

Branched RAG

If an LLM needs to handle complex queries that call for specialized knowledge, as in in-depth research or legal tools, a branched RAG architecture is a good fit. Branched RAG takes a flexible approach to data retrieval by determining which specific data sources to query based on the input.
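
The routing idea can be sketched as follows. The `SOURCES` and `ROUTING_KEYWORDS` dictionaries and the keyword-overlap router are assumptions for illustration; a real system might use a classifier or an LLM to choose the branch.

```python
import re

# Assumed toy data sources, one list of documents per branch.
SOURCES = {
    "legal": [
        "A non-compete clause restricts an employee from joining a competitor.",
        "Liability is limited to the contract value.",
    ],
    "research": [
        "Transformer models rely on self-attention to weigh input tokens.",
        "The experiment compared three optimizers.",
    ],
}

# Assumed keywords that signal which branch a query belongs to.
ROUTING_KEYWORDS = {
    "legal": {"contract", "clause", "liability", "law"},
    "research": {"model", "models", "experiment", "paper", "transformer"},
}


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def route(query):
    """Pick the branch whose keywords overlap most with the query.

    Ties (including no match at all) fall back to the first branch.
    """
    words = tokenize(query)
    return max(ROUTING_KEYWORDS, key=lambda name: len(words & ROUTING_KEYWORDS[name]))


def branched_retrieve(query):
    """Route the query, then search only the chosen source."""
    branch = route(query)
    words = tokenize(query)
    best = max(SOURCES[branch], key=lambda doc: len(words & tokenize(doc)))
    return branch, best


print(branched_retrieve("What does a non-compete clause mean in a contract?"))
print(branched_retrieve("How do transformer models use attention?"))
```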

HyDE

Hypothetical Document Embeddings, or HyDE, is a RAG architecture that first generates a hypothetical document from the query and then uses that document’s embedding to retrieve relevant information. This is particularly useful in research and development areas where queries could be vague. HyDE can also be used in creative content generation, where flexible, imaginative outputs are required.
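
The hypothetical-document step can be sketched as below. Everything here is a simplifying assumption: `generate_hypothetical_document` returns a canned answer instead of calling an LLM, and `embed` uses plain word counts instead of a learned embedding model.

```python
import math
import re
from collections import Counter

# Assumed toy corpus.
DOCUMENTS = [
    "Solar panels convert sunlight into electricity using photovoltaic cells.",
    "Wind turbines generate electricity from moving air.",
]


def embed(text):
    """Toy embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def generate_hypothetical_document(query):
    """Hypothetical stand-in for an LLM that drafts a plausible answer."""
    canned = {
        "how do we get power from the sun?": (
            "Photovoltaic cells in solar panels turn sunlight into electricity."
        ),
    }
    return canned.get(query.lower(), query)  # fall back to the raw query


def hyde_retrieve(query, documents):
    """Embed the hypothetical document, not the query, and retrieve with it."""
    hypothetical_vector = embed(generate_hypothetical_document(query))
    return max(documents, key=lambda doc: cosine(hypothetical_vector, embed(doc)))


query = "How do we get power from the sun?"
print(hyde_retrieve(query, DOCUMENTS))
```

In this toy setup the raw query shares no useful vocabulary with the solar document, while the drafted answer does, which is the gap this approach is meant to close.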

Adaptive RAG

Adaptive RAG is a dynamic architecture that adjusts its retrieval strategy based on the nature of the query. It can change how it approaches a response based on real-time user inputs. Adaptive RAG is commonly used in enterprise search systems.
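
As a rough sketch, adapting the retrieval strategy can be as simple as classifying the query and picking a plan from a table. The heuristics in `classify_query` and the `STRATEGIES` table are assumptions for illustration; a real system would more likely use a trained classifier or an LLM for this decision.

```python
# Assumed strategies: how many documents to fetch for each kind of query.
STRATEGIES = {
    "no_retrieval": 0,  # small talk: answer directly
    "single_step": 1,   # focused question: one lookup
    "multi_step": 3,    # comparison or long question: gather more context
}


def classify_query(query):
    """Toy heuristic that labels a query by its apparent complexity."""
    words = query.lower().split()
    if len(words) <= 3:
        return "no_retrieval"
    if "and" in words or "compare" in words or len(words) > 12:
        return "multi_step"
    return "single_step"


def plan(query):
    """Return the chosen strategy and the number of documents to retrieve."""
    label = classify_query(query)
    return {"strategy": label, "top_k": STRATEGIES[label]}


for example in [
    "Hello there",
    "What is our refund policy?",
    "Compare the refund policy and the shipping policy",
]:
    print(example, "->", plan(example))
```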

CRAG

Corrective RAG, or CRAG, applies self-reflection or self-grading mechanisms to retrieved documents to improve the accuracy and coherence of generated responses. Unlike a traditional RAG model, it evaluates the quality of the retrieved information before generating an output. CRAG is widely used in applications that demand high factual accuracy, such as document registration, financial analysis, or medical diagnosis.
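
The grade-before-generate idea can be sketched as follows. The overlap-based `grade` function, the `threshold` value, and the `fallback_search` stub are assumptions for illustration; real implementations usually rely on a trained evaluator or an LLM to grade documents.

```python
import re

# Assumed toy corpus.
DOCUMENTS = [
    "Quarterly revenue grew by 12 percent.",
    "The cafeteria menu changes weekly.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grade(query, document):
    """Score a document as the fraction of query words it contains (0.0 to 1.0)."""
    query_words = tokenize(query)
    if not query_words:
        return 0.0
    return len(query_words & tokenize(document)) / len(query_words)


def fallback_search(query):
    """Hypothetical stand-in for a secondary source such as web search."""
    return [f"(fallback result for: {query})"]


def corrective_retrieve(query, documents, threshold=0.5):
    """Keep only documents that pass the grade; otherwise use the fallback."""
    relevant = [doc for doc in documents if grade(query, doc) >= threshold]
    return relevant if relevant else fallback_search(query)


print(corrective_retrieve("quarterly revenue growth", DOCUMENTS))
print(corrective_retrieve("employee headcount", DOCUMENTS))
```

Only the documents that survive grading would be passed on to the generation step.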

Self-RAG

Self-RAG is a reliable architecture that autonomously generates retrieval queries during the generation process, which makes it suitable for exploratory research and long-form content creation.

Agentic RAG

Agentic RAG behaves like an agent: it performs complex multi-step tasks by proactively interacting with multiple data sources or APIs to gather information. It is well suited to synthesizing information from various sources and is used in automated research, multi-source data aggregation, and executive decision support.
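
A highly simplified sketch of this loop is shown below. The two tool functions, their data, and the fixed plan returned by `plan_steps` are all hypothetical; in a real agent, an LLM would decide which tool to call next based on what it has observed so far.

```python
def search_sales_db(region):
    """Hypothetical data source: units sold per region (made-up numbers)."""
    return {"north": 120, "south": 95}[region]


def search_inventory_api(region):
    """Hypothetical API: units in stock per region (made-up numbers)."""
    return {"north": 30, "south": 80}[region]


# Tools the agent is allowed to call, keyed by name.
TOOLS = {"sales": search_sales_db, "inventory": search_inventory_api}


def plan_steps(task):
    """Hypothetical planner: returns a fixed plan and ignores the task text."""
    return [
        ("sales", "north"),
        ("sales", "south"),
        ("inventory", "north"),
        ("inventory", "south"),
    ]


def run_agent(task):
    """Execute each planned tool call, then synthesize the observations."""
    observations = {}
    for tool_name, region in plan_steps(task):
        observations.setdefault(region, {})[tool_name] = TOOLS[tool_name](region)
    # Synthesis step: combine results from both sources into one answer.
    top_region = max(observations, key=lambda r: observations[r]["sales"])
    return {
        "top_region": top_region,
        "sales": observations[top_region]["sales"],
        "stock": observations[top_region]["inventory"],
    }


print(run_agent("Which region leads in sales, and how much stock does it have?"))
```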

RAG in NLP

Now that you have understood the key architectures of Retrieval Augmented Generation (RAG), you need to learn how RAG is used to enhance the quality and relevance of the generated text. RAG and natural language processing can be combined to improve the results generated by generative AI models. These models are used in e-commerce, supply chain, banking, legal, and healthcare applications.

RAG vs Traditional Language Models

RAG retrieves external knowledge at query time, which a traditional language model cannot do on its own. This results in more context-aware and up-to-date responses. A traditional LLM depends on the knowledge captured during pre-training, while a RAG system can access external databases or APIs for information. This is what sets RAG apart from a traditional language model.

Learning about Retrieval Augmented Generation (RAG) is a key step toward becoming an established professional, an industry expert, and a thought leader in generative AI. However, learning this complex concept can often be quite challenging. At Eduinx, you can understand the core concepts of RAG along with its real-time applications in the current market. With our non-academic mentors, you can get a good grip on complex concepts such as deep learning with LLMs, industry-specific RAG implementation, and other core applications in the market. Building a concrete foundation in generative AI and learning relevant programming languages is made easy through our hands-on mentorship and capstone projects. You also get 360-degree career support from us: we help you craft your profile and guide you on how to handle those tricky interview questions. Bag your dream job with Eduinx.